It has been almost a month, and I am finally getting around to writing up the Perseid experience, even though I quickly wrote up my Pre-Perseid post the day after. This post documents my August 12, 2007 Perseid experience.

My wife and daughters headed home to LA in mid-afternoon. A family friend was arriving the next day, too early for them to make the return trip after staying up most of the night, as I was planning to do. I set up out on the patio by the master bedroom. I had a lawn chair, my 12×90 binoculars, a table, and my C-8 (still without drive motors, back to the dark ages!). I also set up my FM2 on a tripod, loaded with ISO 160 and ISO 400 professional color print film.

Many people make meteor observing a science, setting themselves up to take many photos, record accurate meteor counts, and so on. That was not my intent. I wanted to see as many meteors as I could, do some visual observing so I wouldn't go nuts by myself out there, and see if I could get some interesting pictures. I think I succeeded on all counts, though I did not get a super picture or any real scientific data.

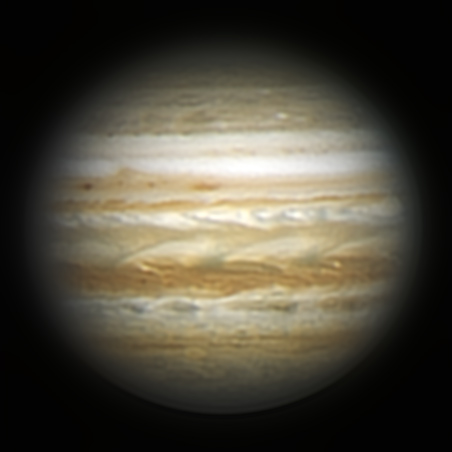

I had everything set up at about 8:50 pm (all times PDT). I spent the first hour focused on observing, going after globular clusters and double stars, using my trusty Celestron guide to the sky. I saw M80, M10, M12, and NGC 6293, and picked out at least 6 double star pairs. I mixed this with meteor watching. There were several bright grazers with long tails during that first hour.

Moving into the 11:00 to midnight hour, the pace of meteors picked up. During that hour I was fairly dedicated to meteor watching and recording, and saw a Perseid about once every other minute. They came in bunches, with a few non-Perseids mixed in. I distinguished the non-Perseids by their direction. A meteor shower is named after the constellation that contains its apparent source, and the Perseids come from Perseus, which sits in the northern sky this time of year, just below Cassiopeia. I considered any meteor that did not move generally from north to south not to be a Perseid.

There were many bright trails during this hour. Things slowed down around midnight. I took a look at M31, the Andromeda galaxy, and then at the Double Cluster in Perseus. It was fantastic, a double clump of stars. Very impressive. The midnight to 1:00am hour was much slower: only about 20 meteors, one every several minutes, and that count is probably low because I stepped away several times. At 1:00am I put away the telescope.

After 1:00am the meteors seemed to come in clumps. I'd go several minutes without seeing any, then get a bunch. I recorded about 45 meteors between 1:00am and 2:30am, and then went in. I know the more serious observers out there will rightly tell me that it was just getting started, but I was too tired to keep looking, and I had accomplished my observing goals.

And then there was the camera. I tried all sorts of different exposures with a 35mm f1.4 lens. My exposure times were set by whatever came to mind, or by when I realized I had left the shutter open. I also did not look carefully at the f-stop and took a number of shots with the aperture stopped down, which makes no sense when you want more light. I caught many planes. A major jet route passes more or less over Hemet, which is north of us, so from our perspective the planes go over Cahuilla Peak, right where the Perseids would be. I did catch one Perseid.

The meteor is in the upper right of the image. You can clearly see Cassiopeia and make out the Andromeda galaxy just right of center, toward the bottom. I also got a very nice star trails image.

I really like the colors in the stars. I will be getting a piggyback mount so that I can take long-exposure, very wide-field images without trails. This image has shown me what is possible up there in the mostly dark skies of the Anza valley.

Altogether, a successful meteor shower watch. I now know what to expect and can plan a more professional watching and imaging session for the next shower. Perhaps the Geminids in December. It will be chilly, but it could be nice.
