I have been using a new planetary imaging camera for the last several months. I have a DMK DFK21AU04 640×480 USB 2.0 camera. I originally bought a FireWire camera but was able to swap it for a USB version, which is much more convenient since my laptop does not supply power to the FireWire port. I also purchased a color camera because I am not yet up to getting a full filter wheel set-up to do RGB planetary imaging. Yes, you could call that lazy.

One struggle I have had is choosing among the many video formats and codecs. The Imaging Source’s software gives you plenty of options. I learned the hard way that some of the codecs cannot be read directly by Registax. Those files have to be opened in VirtualDub and saved as BMPs via the export function. This is an extra step I’d like to avoid.

Now there is an advantage to going to BMPs. Registax appears to have a limit that prevents it from reading an AVI of more than 2,000 frames. There is no such limit on BMP processing: you just drag the list of however many thousand images you have right into the Registax window and it works fine. This has allowed me to do some nice Saturn imaging that would not have been as good if I had to use fewer images. It also let me process some captures where I had shot over 2,000 frames before I was aware of the limit.

IC Capture lets you set the video size and color format with three settings and supports a whole bunch of codecs for compression. Being lazy, I went with the defaults: a video size and color format of YUY2 and a compression codec of DV Video Encoder. Of course this could not be read by Registax, but I used my workaround through VirtualDub and all was well, or so I thought.

Last Friday I took and processed this image of Saturn.

Not the greatest Saturn image, but OK. There’s another story on the processing of the image, but we’ll get to that in another post. One problem though. I posted the image in the Bad Astronomy / Universe Today forum and Mike Salway noted that it looked a bit squished. He was right. Here is a corrected version of the image.

Apparently the DV encoder makes the image wider, resampling the 640-pixel width to the DV frame width (NTSC DV frames are 720×480), which distorts the proportions. I finally did some research and found that the recommendation is never to use compression. So that sent me into experimentation mode.
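For anyone who wants to undo this kind of stretch by hand, here is a minimal sketch of the arithmetic. It assumes the encoder wrote standard NTSC DV frames (720×480) from a 640×480 source; I did not verify that for my own files, and the function name `corrected_size` is just something made up for illustration.

```python
def corrected_size(width, height, native_width=640, encoded_width=720):
    """Return the (width, height) an image should be resized to so that a
    horizontal stretch from native_width to encoded_width is undone.

    Assumption: only the width was resampled (640 -> 720); the height is
    left untouched.
    """
    # Multiply before dividing so whole-number cases stay exact.
    new_width = round(width * native_width / encoded_width)
    return new_width, height


# A full DV-sized frame goes back to the camera's native size.
print(corrected_size(720, 480))  # (640, 480)

# A crop around the planet gets the same horizontal correction.
print(corrected_size(360, 240))  # (320, 240)
```

The resulting size can then be typed into the resize dialog of whatever image editor you use, with "keep aspect ratio" turned off.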

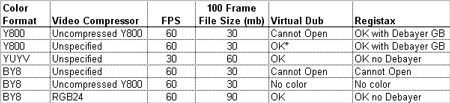

The recommended format for imaging is Y800. Y800 appears black and white but debayering will reveal the colors. In Registax on the first screen, select additional options, use debayer and GB under the Debayer options. Full instructions are available from the Imaging Source blog. Rather than try to communicate this in text, here is a table with my results (FPS=Frames per second).
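To show why a Y800 frame looks black and white yet still carries color, here is a rough sketch of the simplest possible debayer. It assumes "GB" means each 2×2 cell of the sensor is laid out as G B on the first row and R G on the second, and it collapses each cell into one RGB pixel at half resolution. This is not how Registax does it (I don't know what interpolation it uses); it is only meant to illustrate the idea.

```python
def debayer_gb_superpixel(raw):
    """Turn a raw Bayer frame into half-resolution RGB.

    raw: list of rows, each a list of 8-bit values, with even dimensions.
    Assumed cell layout (an assumption, see above):
        G B
        R G
    Returns a list of rows of (r, g, b) tuples.
    """
    rgb = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[y]) - 1, 2):
            g1 = raw[y][x]          # top-left green
            b = raw[y][x + 1]       # top-right blue
            r = raw[y + 1][x]       # bottom-left red
            g2 = raw[y + 1][x + 1]  # bottom-right green
            row.append((r, (g1 + g2) // 2, b))
        rgb.append(row)
    return rgb


# A tiny 4x2 "frame": two Bayer cells side by side.
frame = [
    [100, 20, 100, 20],
    [200, 110, 200, 110],
]
print(debayer_gb_superpixel(frame))  # [[(200, 105, 20), (200, 105, 20)]]
```

Picking the wrong pattern swaps the channels around, which is why a wrong Debayer setting gives you a planet in odd colors.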

So the bottom line is this. If you need fewer than 2,000 frames at 60 fps, go with Y800/Unspecified and go straight to Registax. If you’re OK with 30 fps but need lots of frames, go with YUYV/Unspecified and run it through VirtualDub to get BMPs. If you need 60 fps and lots of frames, use BY8 and RGB24. It uses a lot of disk space, but you get your frames and your frame rate.
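The same rule of thumb can be written down as a small lookup, along with a rough estimate of how much disk the RGB24 route eats. This is only a sketch of my own decision rule: the function names are made up, the case of few frames at 30 fps is not something I tested (I assume Y800 is fine there too), and the size estimate assumes uncompressed 640×480 frames at 3 bytes per pixel with container overhead ignored.

```python
REGISTAX_AVI_FRAME_LIMIT = 2000  # the AVI limit I ran into in Registax


def suggest_format(frames, need_60fps):
    """Return a suggested capture setting based on the rules in this post."""
    if frames < REGISTAX_AVI_FRAME_LIMIT:
        # The post only covers this case at 60 fps; using it at 30 fps
        # as well is an untested assumption.
        return "Y800/Unspecified, straight into Registax"
    if need_60fps:
        return "BY8 and RGB24"
    return "YUYV/Unspecified, then VirtualDub to BMPs"


def rgb24_bytes(frames, width=640, height=480):
    """Approximate size of an uncompressed RGB24 capture (3 bytes/pixel)."""
    return frames * width * height * 3


print(suggest_format(1500, need_60fps=True))   # Y800/Unspecified, straight into Registax
print(suggest_format(5000, need_60fps=False))  # YUYV/Unspecified, then VirtualDub to BMPs
print(suggest_format(5000, need_60fps=True))   # BY8 and RGB24
print(rgb24_bytes(5000))                       # 4608000000
```

So a 5,000-frame RGB24 capture comes to roughly 4.6 GB, which is what I mean by a lot of disk space.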

Comments and corrections greatly desired.