In my post on darks and biases below, I combined a small and a large number of frames to create master frames and see how much difference could be seen in the master frames. I have now repeated the experiment in PixInsight and have some more quantitative results.

The overall result is the same: The difference in the bias frames is quite noticeable, the difference in the darks is hard to discern, and any difference in the final calibrated light frames is hard to see at all.

On further analysis, these results make sense. A bias is the base-level readout from the camera and is subject to read noise. That base-level readout, the bias, is a very low signal. The average bias pixel value is about 0.00162 on a 0-1 scale, or about 106 on a 16-bit scale, with a maximum value of 0.00678 (0-1) or 444 (16-bit). With such a low signal, the read noise is more visible, and so is the reduction in noise from integrating more frames.
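A quick way to see why the bias benefits so visibly from more frames is to simulate it. The sketch below is a toy model, not measured data: it assumes each bias pixel is a constant level plus independent Gaussian read noise (the `read_noise` value of 1e-4 is a made-up placeholder), averages N simulated frames, and reports the noise left in the master. For uncorrelated noise, that residual noise should shrink roughly as 1/sqrt(N).

```python
import numpy as np

def stacked_noise(n_frames, read_noise=1e-4, bias_level=0.00162,
                  n_pixels=20_000, seed=0):
    """Noise (std. dev.) left after averaging n_frames simulated bias frames.

    Toy model: every pixel is a constant bias level plus independent Gaussian
    read noise. read_noise is a placeholder value, not a measured one.
    """
    rng = np.random.default_rng(seed)
    frames = bias_level + rng.normal(0.0, read_noise, size=(n_frames, n_pixels))
    master = frames.mean(axis=0)  # plain average, no rejection
    return float(master.std())

for n in (48, 148):
    print(f"{n:3d} frames: noise {stacked_noise(n):.3e}")

ratio = stacked_noise(148) / stacked_noise(48)
print(f"ratio {ratio:.3f}, 1/sqrt(N) predicts {(48 / 148) ** 0.5:.3f}")
```

The simulated ratio lands close to the 1/sqrt(N) prediction; real bias frames also carry fixed-pattern structure that this model ignores.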

With darks, we are capturing both the bias and the dark noise. The dark noise is greater than the bias (otherwise we would not need to take darks). The dark has an average value of 0.00174 / 114 (same units as above), which is about 7% higher than the bias, but it has a maximum value of 0.859 / 56,300. With this greater signal and larger variance, the improvement from a larger number of frames is harder to see than it is with the bias. The dark noise itself is noise, and a larger number of frames gives us a better measure of that noise. I do not think that increase in quality is visible, but it should contribute to a better final image. In the images below, I have stretched the dark and bias frames identically; some difference is visible between the low and high numbers of integrated frames.
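The unit conversions and the 7% figure in the two paragraphs above can be checked with a few lines of arithmetic. This sketch assumes the 0-1 scale maps linearly onto 0 to 65,535 for 16-bit data, which matches the numbers quoted; the helper name `to_adu` is just for illustration.

```python
def to_adu(normalized, bit_depth=16):
    """Map a value on the 0-1 scale to ADU for the given bit depth."""
    return normalized * (2 ** bit_depth - 1)

# Master statistics quoted in the text (0-1 scale).
bias_mean, bias_max = 0.00162, 0.00678
dark_mean, dark_max = 0.00174, 0.859

print(f"bias mean {to_adu(bias_mean):.0f}, max {to_adu(bias_max):.0f} ADU")
print(f"dark mean {to_adu(dark_mean):.0f}, max {to_adu(dark_max):.0f} ADU")
print(f"dark mean is {100 * (dark_mean / bias_mean - 1):.1f}% above the bias mean")
```

This gives about 106 and 444 ADU for the bias, 114 and roughly 56,300 ADU for the dark, and a 7.4% difference between the two means.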

Finally, with the light frames, the signal is much greater than the dark, with an average value 1.5 times greater after calibration and 2.4 times greater before calibration. In addition, my frames are dithered, so the camera-based noise (such as dark noise and bias) is spread out among the subframes and minimized during image integration through data rejection.
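The effect of dithering plus rejection can be illustrated with a toy one-dimensional simulation. Everything in it is an assumption for illustration: a flat sky at 0.1, a single hot pixel fixed to the sensor, integer-pixel dither offsets, registration by a simple shift, and a plain median/standard-deviation sigma clip rather than any of PixInsight's rejection algorithms.

```python
import numpy as np

def sigma_clip_mean(stack, k=3.0):
    """Per-pixel mean after rejecting values more than k std. devs. from the median."""
    med = np.median(stack, axis=0)
    sd = np.std(stack, axis=0)
    keep = np.abs(stack - med) <= k * sd
    return np.where(keep, stack, 0.0).sum(axis=0) / keep.sum(axis=0)

def simulate(offsets, n_pix=200, hot_index=50, sky=0.1, hot=1.0,
             noise=0.01, seed=1):
    """Register and combine 1-D frames that share one hot pixel on the sensor."""
    rng = np.random.default_rng(seed)
    hot_map = np.zeros(n_pix)
    hot_map[hot_index] = hot  # defect is fixed to the sensor, not the sky
    registered = []
    for d in offsets:
        frame = sky + rng.normal(0.0, noise, n_pix) + hot_map
        # Registration shifts each frame back onto the sky grid, which moves
        # the hot pixel to sky position hot_index + d.
        registered.append(np.roll(frame, d))
    return sigma_clip_mean(np.array(registered))

dithered = simulate(offsets=range(-18, 18))  # 36 distinct dither offsets
undithered = simulate(offsets=[0] * 36)      # no dithering
print(f"dithered: {dithered[50]:.3f}  undithered: {undithered[50]:.3f}")
```

With 36 distinct offsets the hot pixel lands on a different sky position in each registered frame, so it is a lone outlier wherever it falls and gets rejected; without dithering it sits at the same position in all 36 frames and survives the combine. `np.roll` wraps around the array ends, which is harmless here because the offsets are small compared with the array.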

First, let’s look at the biases. I combined 48 and 148 frames to make two different master bias frames. The frames were averaged without normalization or weighting. I used a Winsorized Sigma Clip with a high and low sigma factor of 3.0. The combination yielded the following noise evaluation results:
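For readers unfamiliar with Winsorized sigma clipping, here is a generic per-pixel version. It is a sketch, not PixInsight's implementation: it estimates a robust sigma by repeatedly clamping (Winsorizing) values more than 1.5 sigma from the median and rescaling by 1.134, the factor that makes the Winsorized standard deviation consistent for Gaussian data, then rejects values outside the low/high limits (3.0 sigma, as in the bias integration) and averages the rest. The iteration limit, tolerance, and `winsor_k` value are assumptions.

```python
import numpy as np

def winsorized_sigma_clip_mean(stack, sigma_low=3.0, sigma_high=3.0,
                               winsor_k=1.5, max_iter=10, tol=5e-4):
    """Average a (n_frames, ...) stack after Winsorized sigma clipping.

    Returns the combined image and a boolean mask of the values kept.
    """
    stack = np.asarray(stack, dtype=np.float64)
    center = np.median(stack, axis=0)
    sigma = np.std(stack, axis=0)
    for _ in range(max_iter):
        # Clamp (Winsorize) values beyond winsor_k * sigma of the median...
        w = np.clip(stack, center - winsor_k * sigma, center + winsor_k * sigma)
        # ...and rescale so the estimate is consistent for Gaussian data.
        new_sigma = 1.134 * np.std(w, axis=0)
        converged = np.all(np.abs(new_sigma - sigma) <= tol * sigma)
        sigma = new_sigma
        if converged:
            break
    keep = ((stack >= center - sigma_low * sigma) &
            (stack <= center + sigma_high * sigma))
    combined = np.where(keep, stack, 0.0).sum(axis=0) / np.maximum(keep.sum(axis=0), 1)
    return combined, keep

# Hypothetical test stack: 48 bias-like frames plus one outlier pixel.
rng = np.random.default_rng(0)
stack = 0.00162 + rng.normal(0.0, 1e-4, size=(48, 64, 64))
stack[0, 10, 10] = 0.5
master, keep = winsorized_sigma_clip_mean(stack)
print(f"{master[10, 10]:.5f}", bool(keep[0, 10, 10]))
```

The outlier inflates the plain standard deviation at that pixel, but Winsorizing pulls the sigma estimate back toward the read-noise level within a few iterations, so the outlier is rejected and the combined value stays near the 0.00162 bias level.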

Integration of 48 images:

Gaussian noise estimates: σ = 1.677e-005

Reference SNR increments: Δσ0 = 1.1788

Average SNR increments: Δσ = 1.1784

Integration of 148 images:

Gaussian noise estimates: σ = 9.742e-006

Reference SNR increments: Δσ0 = 1.4379

Average SNR increments: Δσ = 1.4362

To put the numbers in perspective, the overall noise is 42% lower and the SNR is 22% higher, assuming I am interpreting the numbers correctly.
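The percentages above can be reproduced directly from the log values and compared with the 1/sqrt(N) scaling expected when averaging purely random, uncorrelated noise. The helper `pct_change` is just for illustration.

```python
import math

def pct_change(new, old):
    """Percent change going from old to new."""
    return 100.0 * (new / old - 1.0)

# Values from the 48- and 148-frame bias integrations above.
noise_48, noise_148 = 1.677e-5, 9.742e-6
ref_snr_48, ref_snr_148 = 1.1788, 1.4379

print(f"noise: {pct_change(noise_148, noise_48):+.1f}%")
print(f"reference SNR increment: {pct_change(ref_snr_148, ref_snr_48):+.1f}%")
# Averaging N frames of uncorrelated noise scales the noise by 1/sqrt(N).
print(f"ideal noise change: {100.0 * (math.sqrt(48 / 148) - 1.0):+.1f}%")
```

This gives about -41.9% for the noise and +22.0% for the reference SNR increment, against an ideal noise change of about -43% for going from 48 to 148 frames, so the measured bias improvement is close to what pure averaging predicts.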

Master bias integrating 48 frames:

Bias from 48 frames

Master bias integrating 148 frames:

Bias from 148 frames

I discovered a very nice feature in PixInsight while performing this experiment. In the original test, I used 44 and 80 frames to create the master darks. PixInsight will report how many low and high pixels are rejected for each frame being integrated. When I looked at the log, I saw that the first frame had a very high number of pixels being rejected:

1 : 1150170 35.777% ( 516 + 1149654 = 0.016% + 35.761%)
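Scanning the rejection log by eye works for a few dozen frames; for larger sets, a small script can flag frames like this one. The regular expression below is modeled on the single line quoted above and is an assumption about the log format, which may differ between PixInsight versions; the 5% threshold is likewise an arbitrary example.

```python
import re

# Pattern modeled on the one log line quoted in the text:
# frame : total pct% ( low + high = low_pct% + high_pct%)
REJECTION_LINE = re.compile(
    r"^\s*(\d+)\s*:\s*(\d+)\s+([\d.]+)%\s*\(\s*(\d+)\s*\+\s*(\d+)"
    r"\s*=\s*([\d.]+)%\s*\+\s*([\d.]+)%\s*\)"
)

def parse_rejection(line):
    """Return (frame, low_pct, high_pct) for a rejection line, or None."""
    m = REJECTION_LINE.match(line)
    if m is None:
        return None
    return int(m.group(1)), float(m.group(6)), float(m.group(7))

def suspicious_frames(lines, max_high_pct=5.0):
    """Frame numbers whose high-pixel rejection exceeds a hypothetical threshold."""
    flagged = []
    for line in lines:
        parsed = parse_rejection(line)
        if parsed is not None and parsed[2] > max_high_pct:
            flagged.append(parsed[0])
    return flagged

log_line = "1 : 1150170 35.777% ( 516 + 1149654 = 0.016% + 35.761%)"
print(parse_rejection(log_line), suspicious_frames([log_line]))
```

Lines that do not match the pattern are simply skipped, so the function can be fed a whole log file line by line.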

At the time I started taking the darks, the “simple cooler” command I used in CCDCommander did not wait for the camera to cool fully before taking the first dark. The camera got down to temperature fairly quickly, but apparently not quickly enough. The images below exclude that dark frame from the masters.

The master dark integration settings were identical to the ones used for the biases. Here are the statistics from the dark integration:

Integration of 43 images:

Gaussian noise estimates: σ = 2.499e-005

Reference SNR increments: Δσ0 = 2.8991

Average SNR increments: Δσ = 2.6725

Integration of 79 images:

Gaussian noise estimates: σ = 2.064e-005

Reference SNR increments: Δσ0 = 3.2427

Average SNR increments: Δσ = 3.2073

The noise decreases by 17% and the average SNR increment increases by 20%, again if I am interpreting the statistics correctly. It is somewhat ironic that the signal in this case is noise. But it is that noise we are trying to measure by taking darks.
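The dark percentages can be checked the same way. Computing both SNR lines from the log shows that the 20% figure corresponds to the "Average SNR increments" value, while the "Reference SNR increments" value rises by about 12%. As before, `pct_change` is just an illustrative helper.

```python
def pct_change(new, old):
    """Percent change going from old to new."""
    return 100.0 * (new / old - 1.0)

# Values from the 43- and 79-frame dark integrations above.
darks_43 = {"noise": 2.499e-5, "reference SNR": 2.8991, "average SNR": 2.6725}
darks_79 = {"noise": 2.064e-5, "reference SNR": 3.2427, "average SNR": 3.2073}

for name, old in darks_43.items():
    print(f"{name}: {pct_change(darks_79[name], old):+.1f}%")
```

This prints roughly -17.4% for the noise, +11.9% for the reference SNR increment, and +20.0% for the average SNR increment.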

Master dark integrating 43 frames:

Dark from 43 frames

Master dark integrating 79 frames:

Dark from 79 frames

Of course, the whole reason for calibration is to create a better light image. I processed my light frames of NGC 925, taken in early December, using the two different sets of masters. Each set of masters was used to calibrate both the lights and the flats. I used dark optimization during calibration. All lights were aligned to the same frame using nearest neighbor resampling. I used an average combine, multiplicative output normalization, scale + zero offset normalization for rejection, and Winsorized sigma clipping with a clip setting of 2.2 on both high and low pixels.
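The calibration described above follows the usual recipe: subtract the bias, subtract a scaled dark, and divide by a normalized flat. The sketch below shows that recipe with a simple form of dark optimization. It is not PixInsight's ImageCalibration code: the noise estimate (standard deviation of first differences) and the grid search over the dark scale factor are stand-ins chosen for brevity, and it assumes the master dark still contains the bias and the master flat has already been calibrated. The synthetic data at the end is hypothetical.

```python
import numpy as np

def noise_proxy(image):
    """Crude noise estimate: std. dev. of horizontal first differences."""
    return float(np.std(np.diff(image, axis=-1)))

def optimize_dark_scale(light_minus_bias, dark_current, scales=None):
    """Return the dark scale factor k that minimizes the noise proxy."""
    if scales is None:
        scales = np.linspace(0.0, 2.0, 201)  # search k in steps of 0.01
    costs = [noise_proxy(light_minus_bias - k * dark_current) for k in scales]
    return float(scales[int(np.argmin(costs))])

def calibrate(light, master_bias, master_dark, master_flat):
    """Bias-subtract, subtract an optimized dark, divide by the normalized flat.

    Assumes master_dark still contains the bias and master_flat is already
    calibrated. Returns the calibrated frame and the chosen dark scale.
    """
    dark_current = master_dark - master_bias
    k = optimize_dark_scale(light - master_bias, dark_current)
    flat = master_flat / np.mean(master_flat)
    return (light - master_bias - k * dark_current) / flat, k

# Hypothetical synthetic data: the light holds 0.7x the master's dark signal.
rng = np.random.default_rng(0)
shape = (300, 300)
bias = np.full(shape, 0.00162)
dark_current = rng.exponential(0.001, shape)  # fixed-pattern dark signal
light = bias + 0.7 * dark_current + 0.05 + rng.normal(0.0, 0.0005, shape)
calibrated, k = calibrate(light, bias, bias + dark_current, np.ones(shape))
print(f"dark scale {k:.2f}, mean level {calibrated.mean():.4f}")
```

On this synthetic frame the search recovers a dark scale close to the 0.7 built into the data. A real optimizer would use a better noise estimator and a proper one-dimensional minimizer rather than a fixed grid.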

Integration of 36 images using 48 bias and 43 darks:

Gaussian noise estimates: σ = 4.955e-005

Reference SNR increments: Δσ0 = 3.7736

Average SNR increments: Δσ = 3.6726

Integration of 36 images using 148 bias and 79 darks:

Gaussian noise estimates: σ = 4.947e-005

Reference SNR increments: Δσ0 = 3.7751

Average SNR increments: Δσ = 3.6744

This is a 0.16% decrease in noise and a 0.05% increase in SNR. Not a huge statistical improvement and, to my eye, not visible in the final image. The two lights below are stretched to the noise floor or beyond and it is difficult to say that one is better than the other. Both are stretched identically. The image artifact in the upper right showed up on my second night of data gathering and I suspect it is from frost in the camera.

NGC 925 processed with bias master 48 and dark master 43:

NGC 925 calibrated with a smaller number of Bias and Darks

NGC 925 processed with bias master 148 and dark master 79:

NGC 925 calibrated with a large number of Bias and Darks

There is no great illuminating conclusion to this analysis. Certainly, take all the darks and biases you can. There is a real improvement in the bias quality and they don’t take a lot of time. I am going to try to get another 100 darks to see if I get any improvement, but I feel once you are in the 40s the increase in quality is small. I may do this experiment with my color frames, which are binned 2×2 and have much lower signal than the luminance I used here.

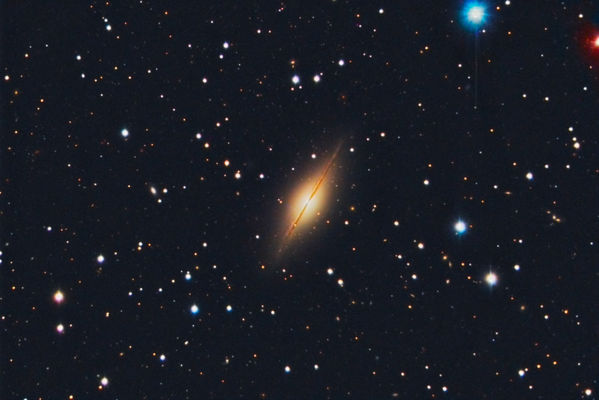

Finally, here is the high-number calibrated NGC 925 stretched to look decent, just so everyone knows it is a nice image. Check out the fully processed LRGB NGC 925 in the gallery, too.

NGC 925 with a normal stretch
